The Problem

- AI/LLM API costs spiraling out of control, with no visibility into usage or spend

- Sensitive data being sent to external LLM providers (OpenAI, Anthropic) without PII/PHI detection

- No rate limiting, caching, or fallback strategies for AI API calls, causing reliability issues

- Lack of observability into LLM performance, quality, and prompt effectiveness

- Compliance and governance gaps for AI usage (GDPR, HIPAA, SOC 2) with no audit trail

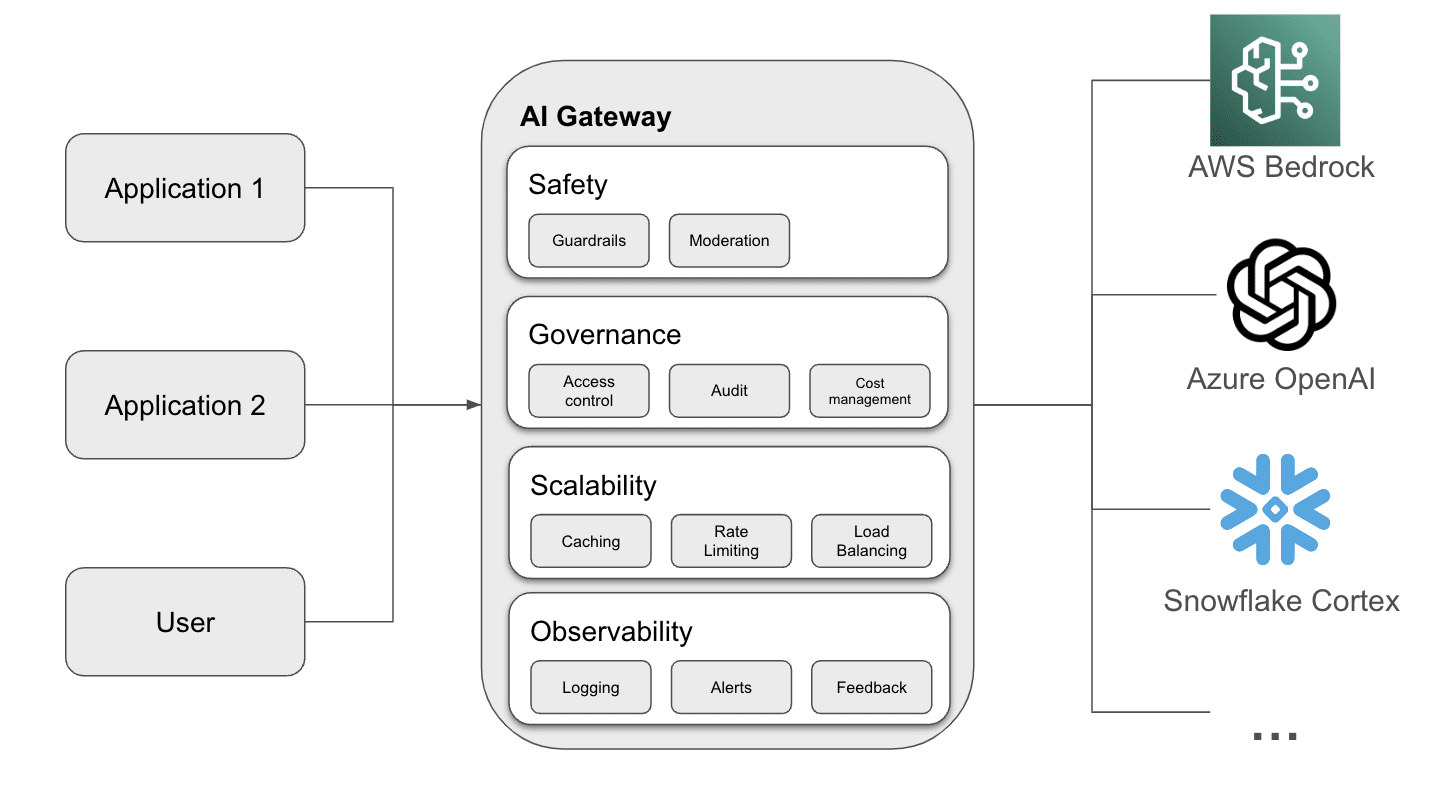

Our Solution

LLM Cost Management

Per-user, per-team budgets and real-time cost tracking with automatic spend limits
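As a rough illustration of how per-user and per-team spend limits can be enforced, here is a minimal Python sketch. The `BudgetGuard` class, the `request_cost` helper, and the per-1K-token prices are hypothetical; a production gateway would keep these counters in shared storage rather than in process memory.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class BudgetGuard:
    """Hypothetical in-memory spend tracker with per-user and per-team limits (USD)."""
    user_limits: dict[str, float]
    team_limits: dict[str, float]
    user_spend: defaultdict = field(default_factory=lambda: defaultdict(float))
    team_spend: defaultdict = field(default_factory=lambda: defaultdict(float))

    def allow(self, user: str, team: str, estimated_cost: float) -> bool:
        """True only if the call keeps both the user and the team within budget.

        Users or teams without a configured limit are treated as unlimited.
        """
        user_cap = self.user_limits.get(user, float("inf"))
        team_cap = self.team_limits.get(team, float("inf"))
        return (self.user_spend[user] + estimated_cost <= user_cap
                and self.team_spend[team] + estimated_cost <= team_cap)

    def record(self, user: str, team: str, cost: float) -> None:
        """Add the actual cost of a completed call to both running totals."""
        self.user_spend[user] += cost
        self.team_spend[team] += cost


def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Cost of one call from token counts and (hypothetical) per-1K-token prices."""
    return (input_tokens / 1000) * usd_per_1k_input + (output_tokens / 1000) * usd_per_1k_output
```

Calling `allow` before forwarding a request and `record` with the actual cost afterward is what turns passive cost tracking into an automatic spend limit.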

Data Loss Prevention (DLP)

Automated PII/PHI/secrets detection and redaction before sending to LLM providers
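A minimal sketch of pattern-based redaction in Python, assuming prompts are scanned as plain text before they leave the gateway. The three regular expressions and the `redact` function are hypothetical and deliberately simple; real PII/PHI detection typically combines far broader pattern sets with context-aware or ML-based detectors.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # Assumed secret format: an "sk-" prefix followed by a long token.
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
}


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count findings per type."""
    findings: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            findings[label] = count
    return prompt, findings
```

The findings dictionary is what a gateway would write to its audit log or use to block a request outright instead of redacting it.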

Intelligent Caching & Routing

Semantic caching that serves repeated or near-duplicate prompts without a new provider call, plus multi-provider routing for reliability and cost optimization
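The routing side can be illustrated with a simple priority-ordered fallback, sketched in Python. Each provider is modeled as a hypothetical `(name, callable)` pair standing in for a real SDK client; the function tries them in order and returns the first successful response, so an outage or rate-limit error at one provider shifts traffic to the next.

```python
from typing import Callable, Sequence

# Hypothetical provider type: a display name plus a function that sends a prompt.
Provider = tuple[str, Callable[[str], str]]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the list has failed."""


def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, response) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # e.g. timeouts or HTTP 429/5xx raised by a client wrapper
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

A production router would usually add per-provider timeouts, retry budgets, and health checks so a failing provider is skipped rather than retried on every request.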

Prompt Management & Security

Centralized prompt templates, versioning, and injection attack prevention

Observability & Analytics

Detailed metrics on latency, cost, quality, and usage patterns for LLM calls

Compliance & Audit

Complete audit logs, data residency controls, and compliance reporting for AI usage

What's Included

Discovery & Requirements

Weeks 1-2

- Current AI/LLM usage audit across the organization

- Cost analysis and optimization opportunities

- Security and compliance requirements assessment

- Gateway architecture design and tool selection

- Data sensitivity classification

Gateway Implementation

Weeks 3-8

- AI gateway deployment (Kong, Apigee, or custom)

- Multi-LLM provider integration (OpenAI, Anthropic, Azure OpenAI, etc.)

- Authentication and authorization setup

- Rate limiting and quota management

- Semantic caching layer implementation

- DLP and PII detection integration

Security & Compliance

Weeks 9-12

- Prompt injection attack prevention

- Data residency and regional routing

- Audit logging and SIEM integration

- Compliance policy enforcement (GDPR, HIPAA)

- Prompt template management system

- Cost allocation and chargeback setup

Observability & Training

Weeks 13-14

- Metrics and analytics dashboard setup

- Cost tracking and alerting

- Quality and performance monitoring

- Developer training on gateway usage

- Best practices documentation

- 30-day post-launch optimization support

Return on Investment

40-60% Cost Savings

Through intelligent caching, routing, and usage optimization

100% Request Screening

Automated PII/PHI detection scans every prompt routed through the gateway, reducing the risk of sensitive data leaks

99.9% Uptime

Multi-provider failover ensures AI service reliability

Investment

Starter

$20,000

Basic AI gateway for small teams

- Single LLM provider integration

- Basic cost tracking and limits

- Rate limiting and caching

- Standard authentication

- Usage analytics

- 6-8 week timeline

Most Popular

Professional

$40,000

Full-featured AI governance

- Multi-LLM provider support

- Advanced DLP and PII detection

- Semantic caching layer

- Prompt management system

- Compliance controls (GDPR, HIPAA)

- Cost allocation and chargeback

- Observability dashboard

- 10-12 week timeline

Enterprise

$65,000+

Enterprise AI infrastructure

- Multi-region gateway deployment

- Custom LLM integrations

- Advanced security controls

- Fine-tuned model support

- Self-hosted LLM integration

- Advanced analytics and MLOps

- 24/7 support

- Dedicated AI architect

- 12-16 week timeline

Ready to Secure Your AI Infrastructure?

Schedule a free 30-minute consultation to discuss your AI gateway needs